How to Properly Use the Robots.txt Tester in Google Search Console?

Learn how to test your robots.txt file with Google's robots.txt tester so that the code you use on Blogger is correct.

As a webmaster you might be a little curious about robots.txt, because most of us are confused by it. Robots.txt is a file that tells Google's web crawlers to stay away from specific URLs on your site. In other words, you can control the Google web crawler with robots.txt, which makes it a strong tool for webmasters.

What is the Robots.txt Tester?

If you know how to use or write a robots.txt file, then the robots.txt tester will be very easy for you. But sometimes you might make mistakes while writing the file, and the tester helps you with detailed information on whether your robots.txt is correct or wrong. It is only a testing tool, nothing else. If you think that testing a robots.txt file in Google Search Console automatically updates the robots.txt file on your site, you are absolutely wrong. After testing, you have to add it to your website manually.
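To give an idea of the kind of writing mistakes such a check can catch, here is a minimal Python sketch. It is not how Google's tester works; the function name `lint_robots` and the short list of accepted directives are assumptions made only for this illustration.

```python
# Directive names this sketch accepts. This short list is an assumption
# for illustration, not Google's official list.
KNOWN_DIRECTIVES = {"user-agent", "allow", "disallow", "sitemap"}


def lint_robots(text):
    """Return (line_number, message) pairs for lines that look wrong."""
    problems = []
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and spaces
        if not line:
            continue  # blank lines are fine
        if ":" not in line:
            problems.append((number, "missing ':' separator"))
            continue
        name = line.split(":", 1)[0].strip().lower()
        if name not in KNOWN_DIRECTIVES:
            problems.append((number, f"unknown directive '{name}'"))
    return problems


# A deliberately broken sample: a misspelled directive and a missing colon.
sample = """User-agent: *
Disalow: /search
Allow /
"""

for number, message in lint_robots(sample):
    print(f"line {number}: {message}")
```

A check like this only looks at spelling and layout. Whether a given URL is really blocked still has to be tested against the rules themselves.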

How to test a Robots.txt file using the Google Robots.txt tester?

The robots.txt tester is one of Google Search Console's tools, so you need a Google account that is registered with Google Search Console. Assuming you have a Google Search Console account, follow the steps below.

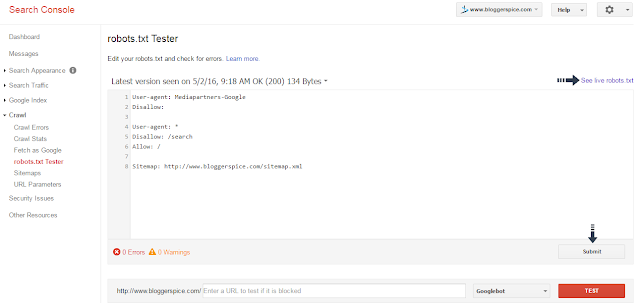

Step 1 Go to Google Search Console and log in. Now open your blog.

Step 2 From the Search Console Dashboard, click Crawl to expand it, then click robots.txt Tester.

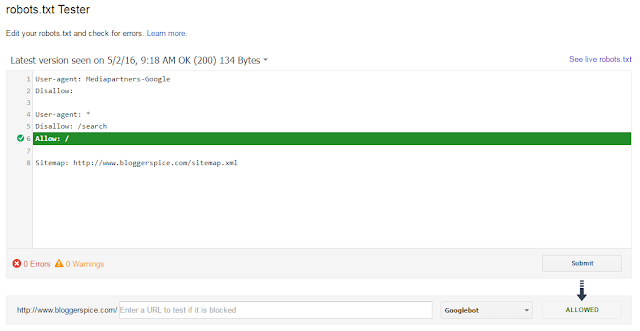

Step 3 On the robots.txt tester page, locate the blank field and type your robots.txt code, like the lines below.

User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: http://www.bloggerspice.com/sitemap.xml

Step 4 Now simply click the TEST button at the bottom of the page. If Googlebot is allowed, a green signal will appear. But if any URL is blocked, a red signal will be displayed instead. In that case you have to correct the code and test it again.
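If you want a rough check on your own computer before opening Search Console, the rules from Step 3 can be parsed with Python's standard `urllib.robotparser` module. This is only a sketch: the `/search/label/SEO` path is a made-up example, and Python's parser is not Google's, so the results may differ in edge cases.

```python
from urllib.robotparser import RobotFileParser

# The rules from Step 3, one directive per line.
RULES = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: http://www.bloggerspice.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())

SITE = "http://www.bloggerspice.com"

# The label URL below is a hypothetical example page.
home_ok = parser.can_fetch("Googlebot", SITE + "/")
search_ok = parser.can_fetch("Googlebot", SITE + "/search/label/SEO")
adsense_ok = parser.can_fetch("Mediapartners-Google", SITE + "/search/label/SEO")
sitemaps = parser.site_maps()  # available in Python 3.8 and later

print("Googlebot, home page:       ", home_ok)
print("Googlebot, search page:     ", search_ok)
print("Mediapartners-Google, search:", adsense_ok)
print("Sitemaps:", sitemaps)
```

With these rules the home page is allowed for Googlebot, the search pages are blocked, and the AdSense crawler (Mediapartners-Google) is allowed everywhere because its Disallow line is empty.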

How to see the live robots.txt?

It is very simple to see the live robots.txt, and you can do it from the robots.txt tester page.

Step 1 Go to the robots.txt tester page and test the robots.txt file.

Step 2 After that, locate the See live robots.txt text link at the top of the screen and click on it.

You will be taken to the live robots.txt file on your site. But don't worry: whatever you typed in the tester is just a trial and is not applied to your site.
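The live file always sits at the root of your domain, so you can also look at it without the tester. The sketch below builds that address and, if you call the second function, downloads the file. The function names `live_robots_url` and `fetch_live_robots` are made up for this example.

```python
from urllib.parse import urljoin
from urllib.request import urlopen


def live_robots_url(site):
    """Build the robots.txt address for the host of any page URL."""
    return urljoin(site, "/robots.txt")


def fetch_live_robots(site, timeout=10):
    """Download the live robots.txt as text (needs an internet connection)."""
    with urlopen(live_robots_url(site), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


# Any page on the blog leads to the same robots.txt address.
print(live_robots_url("http://www.bloggerspice.com/"))
```

Calling `fetch_live_robots("http://www.bloggerspice.com/")` would return whatever is published on the site at that moment, which may differ from the code you typed in the tester.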

How to test an individual URL from your website in the robots.txt Tester?

Sometimes the Google web crawler is blocked from individual pages of our website. In this case we can test the specific page with the robots.txt tester. To test a specific page, please follow the steps below.

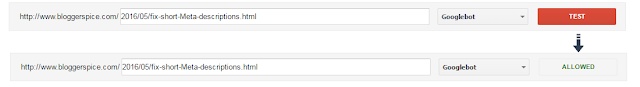

Step 1 Visit the robots.txt tester page in Google Search Console and scroll down.

Step 2 After that, add the URL of any post without the domain part, as shown in the image. Then click the TEST button.

If the Google web crawler is allowed to fetch your post URL, the TEST button will change to ALLOWED.
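The same kind of single-page test can be sketched in Python. As in the tester, you pass the post address without the domain. The helper name `check_path` and both example paths are assumptions for this illustration. Also note that Python's parser applies the first matching rule, while Google applies the most specific one, so treat the result as a hint rather than a final answer.

```python
from urllib.parse import urljoin
from urllib.robotparser import RobotFileParser

RULES = """\
User-agent: *
Disallow: /search
Allow: /
"""


def check_path(rules, path, agent="Googlebot",
               site="http://www.bloggerspice.com"):
    """Return "ALLOWED" or "BLOCKED" for a path given without the domain."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    # Accept the path with or without a leading slash.
    url = urljoin(site + "/", path.lstrip("/"))
    return "ALLOWED" if parser.can_fetch(agent, url) else "BLOCKED"


# Both paths are hypothetical examples.
print(check_path(RULES, "/2016/01/my-post.html"))
print(check_path(RULES, "search/label/SEO"))
```

A normal post address passes, while anything that starts with /search is reported as blocked by the Disallow rule.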

How to download the robots.txt file from the Tester?

After testing the robots.txt file you may want to use it on your website. You can simply copy the code from the robots.txt tester field, or download the robots.txt file from the tester. To download the file, please follow the steps below.

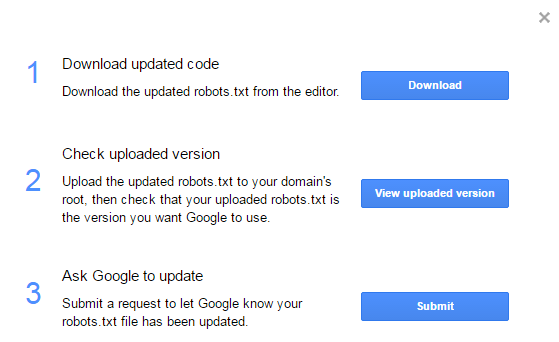

Step 1 Locate the Submit button in the robots.txt tester and click it. A popup window will appear instantly.

Step 2 From there, click the Download button and your robots.txt file will be downloaded as a text file that you can open in Notepad.

Step 3 If you want to see the uploaded version of your robots.txt file, click the View uploaded version button.

Step 4 You can ask Google to update the robots.txt file by clicking the Submit button in the popup window.

That's all about the robots.txt tester. As I have already mentioned above, this is just a tester: here you can test your robots.txt file, but to make it work you must add it to your website's server. In the case of Blogger, you can add it from the settings. I hope this article has given you a detailed idea of the robots.txt tester. Happy Blogging.
