How to Use the Custom robots.txt Feature in Blogger BlogSpot?

Learn how to safely manage your robots.txt changes through Google Search Console. Blogspot users will learn how to add custom robots.txt code in Blogger, with detailed information about what each line means. With a custom robots.txt, you can stop search engine bots from crawling certain directories, web pages, or links on your website or blog.

Robots.txt is not specific to Google Blogger; it is a standard file that websites use to control search engine crawlers. Blogger's custom robots.txt setting is very sensitive, and using it incorrectly can cause search engines to ignore your blog.

So before adding a custom robots.txt file in Blogger, we should understand it properly. First, let me share some basic knowledge about robots.txt.

What is a Robots.txt File?

A robots.txt file is a plain-text file that Blogger generates automatically at the root of your blog (for example, yourblog.blogspot.com/robots.txt) to give search robots instructions about your blog. When search robots from search engines such as Google, Bing, Yahoo, and Yandex visit your site, robots.txt tells them what to crawl and what not to crawl. We can also use it to tell search engines which URLs they should crawl and which they should ignore. Strictly speaking, robots.txt controls crawling rather than indexing, so a blocked URL can still appear in search results if other pages link to it.

Correct Custom Robots.txt File for Blogger

It can be confusing to know what a correct robots.txt file is, or how to write one, because a robots.txt file can be written in different ways. The correct form of the custom robots.txt file for Blogger is below:

User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: http://www.bloggerspice.com/sitemap.xml

If this looks confusing, don't worry; let me explain each part of the custom robots.txt file.

What is User-agent:*?

This line starts the main group of the custom robots.txt file. The * (wildcard) indicates that the group applies to all robots, so every search engine crawler that has no group of its own follows the rules listed under it. The group above it, User-agent: Mediapartners-Google with an empty Disallow:, is for Google's AdSense crawler and lets it visit every page.
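To see how the User-agent lines split the file into groups, here is a minimal sketch using Python's standard `urllib.robotparser` module. It only approximates how real crawlers behave (Google's own parser differs in some details), and `example.blogspot.com` is a placeholder for your blog address.

```python
from urllib.robotparser import RobotFileParser

# The recommended Blogger file, with a placeholder blog address.
ROBOTS_TXT = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: https://example.blogspot.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

search_page = "https://example.blogspot.com/search/label/SEO"

# Mediapartners-Google has its own group with an empty Disallow,
# so it may crawl everything, including /search pages.
print(parser.can_fetch("Mediapartners-Google", search_page))

# Bots without a group of their own fall back to the "User-agent: *" group,
# which blocks /search, so this prints False for each bot.
for bot in ("Googlebot", "Bingbot", "YandexBot"):
    print(bot, parser.can_fetch(bot, search_page))
```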

Function of Disallow: /search

The Disallow keyword tells search robots not to visit any URL whose path starts with the value after it. Here I have used /search, which tells robots not to crawl your site's search result pages. So if your visitors search on your site, the resulting search pages won't be crawled by search engines. Because the match is by prefix, Blogger's label pages (/search/label/...) are blocked as well.
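A quick way to see the prefix matching in action is the sketch below, again using Python's standard `urllib.robotparser`. The blog address and the post and page URLs are placeholders in the usual Blogger layouts, not real pages.

```python
from urllib.robotparser import RobotFileParser

RULES = """\
User-agent: *
Disallow: /search
Allow: /
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())

# Placeholder URLs in the usual Blogger layouts.
urls = [
    "https://example.blogspot.com/search?q=robots",       # on-site search results
    "https://example.blogspot.com/search/label/Blogger",  # label page
    "https://example.blogspot.com/2015/11/my-post.html",  # ordinary post
    "https://example.blogspot.com/p/about.html",          # static page
]

# Every path starting with /search is blocked; everything else may be crawled.
for url in urls:
    verdict = "crawl" if parser.can_fetch("Googlebot", url) else "blocked"
    print(f"{verdict:8} {url}")
```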

Function of Allow: /

The Allow: / line tells search robots that they may visit every other page on your website, that is, everything not blocked by Disallow: /search.
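Because a URL that matches no rule is allowed by default, Allow: / mainly makes the intent explicit. The sketch below, using Python's standard `urllib.robotparser` with placeholder URLs and a hypothetical helper `verdicts`, compares the file with and without that line.

```python
from urllib.robotparser import RobotFileParser

WITH_ALLOW = "User-agent: *\nDisallow: /search\nAllow: /\n"
WITHOUT_ALLOW = "User-agent: *\nDisallow: /search\n"

def verdicts(robots_txt: str, urls: list[str]) -> list[bool]:
    """Hypothetical helper: can_fetch() results for Googlebot on each URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [parser.can_fetch("Googlebot", url) for url in urls]

urls = [
    "https://example.blogspot.com/",
    "https://example.blogspot.com/2015/11/my-post.html",
    "https://example.blogspot.com/search/label/SEO",
]

# Both versions give the same answers: [True, True, False].
print(verdicts(WITH_ALLOW, urls))
print(verdicts(WITHOUT_ALLOW, urls))
```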

Meaning of Sitemap:

This line gives the address of your website's sitemap, which lists the URLs of your posts. The Sitemap line is independent of the User-agent groups, so it applies to all search robots wherever it appears in the file. With it, search robots can find all the posts on your site and collect information about your posts and their URLs.
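To check that a crawler actually picks up the Sitemap line, you can read it back with Python's standard `urllib.robotparser`; `site_maps()` needs Python 3.8 or later. This is a minimal sketch with a placeholder blog address.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Allow: /

Sitemap: https://example.blogspot.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns every Sitemap URL in the file (or None if there is none).
# The Sitemap line belongs to no User-agent group.
print(parser.site_maps())
```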

Sitemap Confusion

In the past, many bloggers who wrote about the custom robots.txt file used a sitemap line like this:

- Sitemap: http://bloggerspice.com/feeds/posts/default?orderby=UPDATED

This was the correct form in the past because Google's sitemap for a blog used to list only 25 posts. So without a sitemap line like the one above, search robots could crawl only 25 posts from a site. Since then, Google has updated its sitemap generation system, and sitemaps are now generated automatically. For this reason, we now use sitemap.xml like this:

- Sitemap: http://www.bloggerspice.com/sitemap.xml

I hope the sitemap confusion is cleared up now.

Test Your Custom Robots.txt File

Testing your custom robots.txt file before adding it to your Blogger site helps make sure it is correct. You can test it in Google Search Console. For details, see the tutorial below:

How to Properly Use Robots.txt tester in Google Search Console?

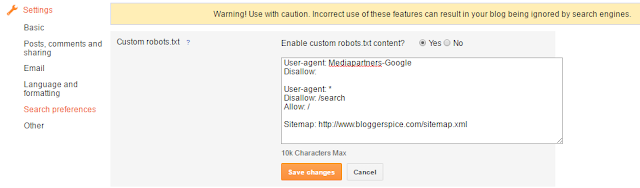

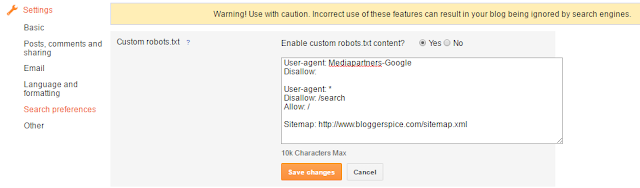
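Besides the Search Console tester, you can run a quick local pre-flight check on a draft before pasting it into Blogger. The sketch below uses Python's standard `urllib.robotparser`, whose matching is simpler than Google's (it applies the first matching rule, while Google applies the most specific one), so treat it as a rough check only. The function name `preflight` and the sample URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

def preflight(robots_txt: str, must_crawl: list[str], must_block: list[str],
              agent: str = "Googlebot") -> list[str]:
    """Hypothetical helper: return a list of problems (empty means the draft passed)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    problems = [f"blocked but should be crawlable: {url}"
                for url in must_crawl if not parser.can_fetch(agent, url)]
    problems += [f"crawlable but should be blocked: {url}"
                 for url in must_block if parser.can_fetch(agent, url)]
    if not parser.site_maps():
        problems.append("no Sitemap line found")
    return problems

# A typo such as "Disallow: /" instead of "Disallow: /search" blocks the whole blog.
draft = """\
User-agent: *
Disallow: /
Sitemap: https://example.blogspot.com/sitemap.xml
"""

print(preflight(
    draft,
    must_crawl=["https://example.blogspot.com/",
                "https://example.blogspot.com/2015/11/my-post.html"],
    must_block=["https://example.blogspot.com/search?q=test"],
))
```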

How To Add a Custom Robots.txt File Correctly in Blogger?

After testing the custom robots.txt file, it's time to add it to your Blogger site. Just follow the steps below:

Step #1: Log in to your Blogger account and go to your Blogger Dashboard.

Step #2: Click Settings -> Search preferences.

Step #3: Under Crawlers and indexing, find Custom robots.txt and click the Edit link.

Step #4: An option labeled "Enable custom robots.txt?" will appear. Select the Yes radio button.

Step #5: Now copy your custom robots.txt file and paste it into the box. Replace www.bloggerspice.com with your own blog URL.

Step #6: Press the orange Save changes button to set the custom robots.txt for your blog.
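If you manage several blogs, a tiny helper can produce the file with your own address already substituted for www.bloggerspice.com. The function name `build_blogger_robots_txt` is hypothetical; it simply reproduces the recommended file from this tutorial and assumes you pass a full URL of your blog, including `https://`.

```python
from urllib.parse import urlsplit

def build_blogger_robots_txt(blog_url: str) -> str:
    """Hypothetical helper: return the recommended custom robots.txt
    with the Sitemap line pointing at this blog's own sitemap.xml."""
    parts = urlsplit(blog_url)
    if not parts.scheme or not parts.netloc:
        raise ValueError(f"expected a full URL like https://example.blogspot.com, got {blog_url!r}")
    home = f"{parts.scheme}://{parts.netloc}"
    return "\n".join([
        "User-agent: Mediapartners-Google",
        "Disallow:",
        "",
        "User-agent: *",
        "Disallow: /search",
        "Allow: /",
        "",
        f"Sitemap: {home}/sitemap.xml",
    ]) + "\n"

# Any page URL of the blog works; only the scheme and host are kept.
print(build_blogger_robots_txt("https://www.example.com/2015/11/my-post.html"))
```

Paste the printed text into the box from Step #5.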

That's it. You have successfully added a custom robots.txt file to your blog. Search robots will now crawl your blog according to the rules in your robots.txt file. I hope you enjoyed the tutorial. Still confused? Then leave me a comment. Thank you.

To create a sitemap, just submit your site to Google Search Console. Visit the URL below and follow only the recommended method. I will update that post tonight, because the sitemap submission process has changed.

http://www.bloggerspice.com/2015/11/why-google-indexing-only-151-links-how-to-fix-this.html

The recommended method is http://www.bloggerspice.com/sitemap.xml; replace bloggerspice with your domain name.

Thanks.
